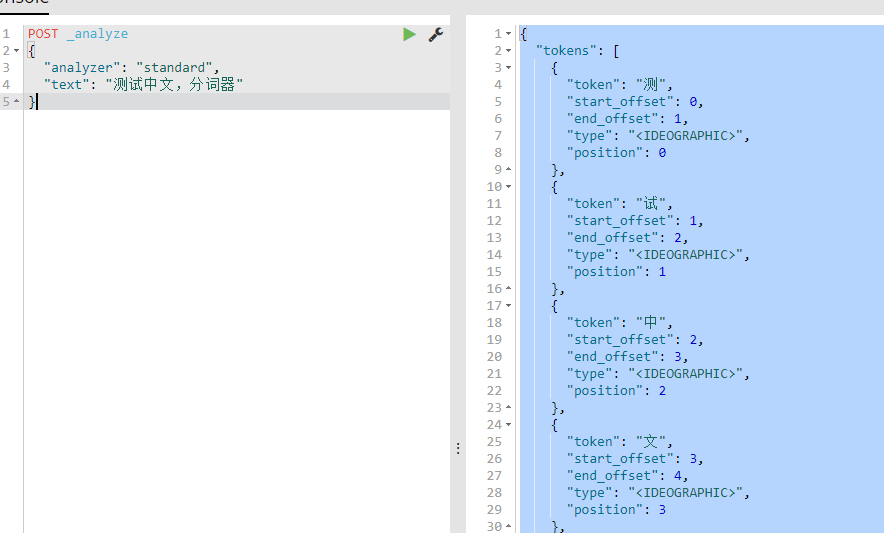

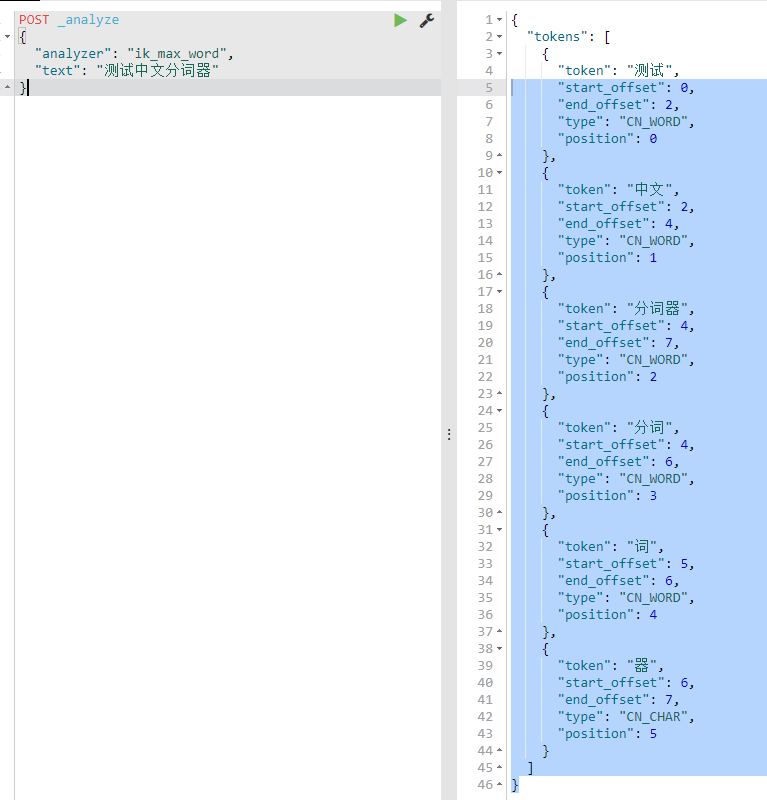

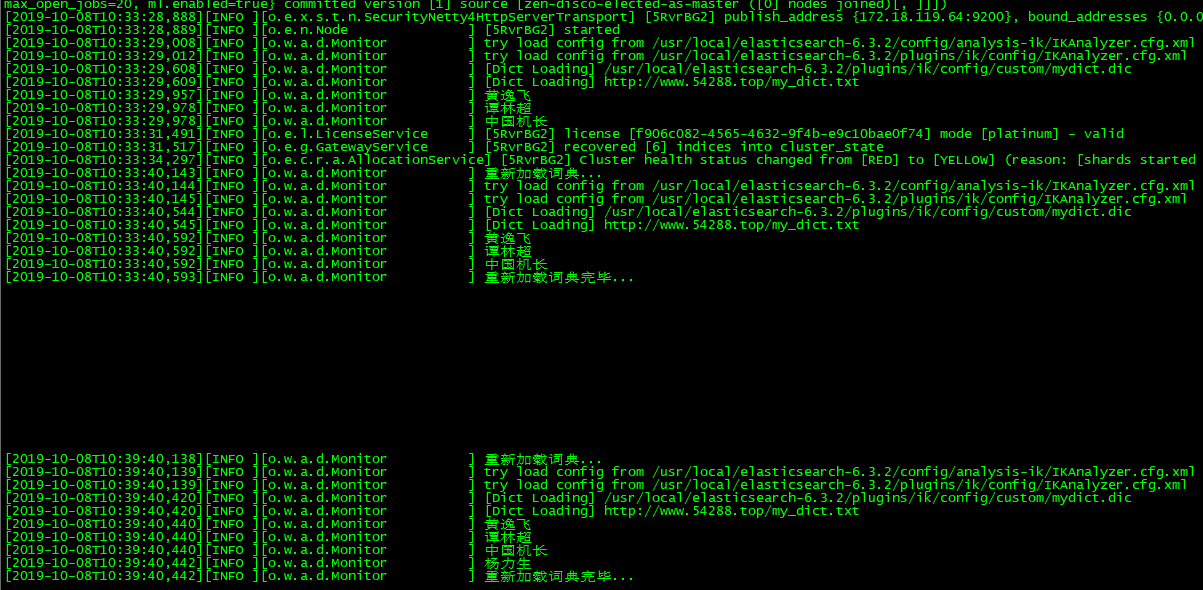

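# Elasticsearch: IKAnalyzer

Author: 丁D | Category: 学无止境 (Never Stop Learning) | 2019-09-12 10:19 | Tags: Chinese analyzer, IKAnalyzer

Summary: this post covers IKAnalyzer, a Chinese analyzer for Elasticsearch, and how to load hot words dynamically.

## Chinese analyzer: IKAnalyzer

[Reference article](https://www.cnblogs.com/zyrblog/p/9830624.html)

In Elasticsearch (1) through Elasticsearch (6) we worked through the basics, and every field value was in English. In real projects, however, the data is mostly Chinese, and Elasticsearch's built-in analyzers handle it poorly. Elasticsearch ships with several built-in analyzers, including the standard analyzer, simple analyzer, whitespace analyzer, and language analyzers. If no analyzer is specified, Elasticsearch uses the standard analyzer by default.

```js
POST _analyze
{
  "analyzer": "standard",
  "text": "测试中文分词器"
}
```

The result shows that the standard analyzer drops punctuation and then splits Chinese text into single characters. This is the Chinese search problem described in the previous chapter, and it is clearly not the segmentation we want. Next, let's look at a Chinese analyzer.

**Installation**

Download a prebuilt release from https://github.com/medcl/elasticsearch-analysis-ik/releases (or https://github.com/348786639/elasticsearch-analysis-ik), or build it from source as shown below. The plugin version must match your Elasticsearch version; this post uses 5.2.0.

```shell
# (1) Clone the tag that matches your Elasticsearch version (5.2.0 here)
git clone -b v5.2.0 git@github.com:medcl/elasticsearch-analysis-ik.git
cd elasticsearch-analysis-ik
# (2) Build the plugin
mvn package
# (3)-(4) Create the plugin directory
cd /usr/local/elasticsearch-5.2.0/plugins/
mkdir ik
# (5) Copy target/releases/elasticsearch-analysis-ik-{version}.zip to your-es-root/plugins/ik and unzip it there
# (6) Restart Elasticsearch
```

Create an index and specify its mapping:

```js
PUT /ik_index
{
  "mappings": {
    "ik_type": {
      "_all": {
        "analyzer": "ik_max_word",
        "search_analyzer": "ik_max_word",
        "term_vector": "no",
        "store": false
      },
      "properties": {
        "content": {
          "type": "text",
          "analyzer": "ik_max_word",
          "search_analyzer": "ik_max_word",
          "include_in_all": true,
          "boost": 8
        }
      }
    }
  }
}
```

This mapping targets Elasticsearch 5.x. `_all` and `include_in_all` are deprecated starting with 6.0, so leave them out on newer versions.

Index a document:

```js
PUT /ik_index/ik_type/1
{
  "content": "测试中文分词器"
}
```

Check the analysis result (see the screenshot below). A search for 测 finds nothing:

```js
GET /ik_index/ik_type/_search
{
  "query": {
    "match": {
      "content": "测"
    }
  }
}
```

A search for 测试 finds the document:

```js
GET /ik_index/ik_type/_search
{
  "query": {
    "match": {
      "content": "测试"
    }
  }
}
```

The match query also runs the query text through ik_max_word. 测 becomes the single term 测, which is not among the terms indexed for 测试中文分词器. 测试 is one of those terms, so it matches.

**Dynamically loading hot words**

New buzzwords and movie titles appear online every year, such as 攀登者 (The Climbers), 网红 (internet celebrity), and 蓝瘦香菇 (an internet meme). We don't want these split apart, so we need to customize the dictionary. There are three ways to do it:

```text
1. Via the local configuration file; changes take effect only after Elasticsearch is restarted.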
2. Via a remote dictionary URL, which supports hot updates (no Elasticsearch restart).
3. By modifying the IK source code to load hot words from MySQL (no Elasticsearch restart).
```

The IK configuration files are in es/plugins/ik/config:

- IKAnalyzer.cfg.xml: configures custom dictionaries
- main.dic: IK's built-in Chinese dictionary with more than 270,000 entries; any word in it is kept together as one token
- quantifier.dic: measure words and units
- suffix.dic: suffixes
- surname.dic: Chinese surnames
- stopword.dic: English stop words

The two most important built-in files are main.dic, whose words drive the segmentation, and stopword.dic, which holds English stop words such as a, the, and, at, but.

To add custom hot words, point `ext_dict` in IKAnalyzer.cfg.xml at a custom dictionary file:

```xml
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- Configure your own extension dictionaries here -->
    <entry key="ext_dict">custom/mydict.dic</entry>
    <!-- Configure your own extension stopword dictionaries here -->
    <entry key="ext_stopwords"></entry>
    <!-- Configure a remote extension dictionary here -->
    <!-- <entry key="remote_ext_dict">words_location</entry> -->
    <!-- Configure a remote extension stopword dictionary here -->
    <!-- <entry key="remote_ext_stopwords">words_location</entry> -->
</properties>
```

Separate multiple dictionaries with semicolons:

```xml
<entry key="ext_dict">custom/mydict.dic;xxxx.dic</entry>
```

Here the dictionary is in /usr/local/elasticsearch-6.3.2/plugins/ik/config/custom, with one word per line:

```shell
[es@iZwz9278r1bks3b80puk6fZ custom]$ cat mydict.dic
许金锭
网红
醉品
攀登者
蓝瘦香菇
```

Compare the results of the following request before and after adding the dictionary, paying attention to 许金锭 and 蓝瘦香菇:

```js
POST 54288.top:9200/_analyze
{
  "analyzer": "ik_max_word",
  "text": "许金锭喜欢看攀登者蓝瘦香菇"
}
```

Before adding the dictionary:

```js
{
  "tokens": [
    { "token": "许", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
    { "token": "金锭", "start_offset": 1, "end_offset": 3, "type": "CN_WORD", "position": 1 },
    { "token": "喜欢", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 2 },
    { "token": "看", "start_offset": 5, "end_offset": 6, "type": "CN_CHAR", "position": 3 },
    { "token": "攀登者", "start_offset": 6, "end_offset": 9, "type": "CN_WORD", "position": 4 },
    { "token": "攀登", "start_offset": 6, "end_offset": 8, "type": "CN_WORD", "position": 5 },
    { "token": "者", "start_offset": 8, "end_offset": 9, "type": "CN_CHAR", "position": 6 },
    { "token": "蓝", "start_offset": 9, "end_offset": 10, "type": "CN_CHAR", "position": 7 },
    { "token": "瘦", "start_offset": 10, "end_offset": 11, "type": "CN_CHAR", "position": 8 },
    { "token": "香菇", "start_offset": 11, "end_offset": 13, "type": "CN_WORD", "position": 9 }
  ]
}
```

After adding it, the same request returns:

```js
{
  "tokens": [
    { "token": "许金锭", "start_offset": 0, "end_offset": 3, "type": "CN_WORD", "position": 0 },
    { "token": "金锭", "start_offset": 1, "end_offset": 3, "type": "CN_WORD", "position": 1 },
    { "token": "喜欢", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 2 },
    { "token": "看", "start_offset": 5, "end_offset": 6, "type": "CN_CHAR", "position": 3 },
    { "token": "攀登者", "start_offset": 6, "end_offset": 9, "type": "CN_WORD", "position": 4 },
    { "token": "攀登", "start_offset": 6, "end_offset": 8, "type": "CN_WORD", "position": 5 },
    { "token": "者", "start_offset": 8, "end_offset": 9, "type": "CN_CHAR", "position": 6 },
    { "token": "蓝瘦香菇", "start_offset": 9, "end_offset": 13, "type": "CN_WORD", "position": 7 },
    { "token": "香菇", "start_offset": 11, "end_offset": 13, "type": "CN_WORD", "position": 8 }
  ]
}
```

**Hot updates with a remote dictionary file**

Start with a sentence that the current dictionaries do not handle:

```js
POST 54288.top:9200/_analyze
{
  "analyzer": "ik_max_word",
  "text": "黄逸飞和谭林超一起去看中国机长"
}
```

Result:

```js
{
  "tokens": [
    { "token": "黄", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
    { "token": "逸", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1 },
    { "token": "飞", "start_offset": 2, "end_offset": 3, "type": "CN_CHAR", "position": 2 },
    { "token": "和", "start_offset": 3, "end_offset": 4, "type": "CN_CHAR", "position": 3 },
    { "token": "谭", "start_offset": 4, "end_offset": 5, "type": "CN_CHAR", "position": 4 },
    { "token": "林", "start_offset": 5, "end_offset": 6, "type": "CN_CHAR", "position": 5 },
    { "token": "超一", "start_offset": 6, "end_offset": 8, "type": "CN_WORD", "position": 6 },
    { "token": "一起", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 7 },
    { "token": "一", "start_offset": 7, "end_offset": 8, "type": "TYPE_CNUM", "position": 8 },
    { "token": "起", "start_offset": 8, "end_offset": 9, "type": "COUNT", "position": 9 },
    { "token": "去看", "start_offset": 9, "end_offset": 11, "type": "CN_WORD", "position": 10 },
    { "token": "看中", "start_offset": 10, "end_offset": 12, "type": "CN_WORD", "position": 11 },
    { "token": "中国", "start_offset": 11, "end_offset": 13, "type": "CN_WORD", "position": 12 },
    { "token": "机长", "start_offset": 13, "end_offset": 15, "type": "CN_WORD", "position": 13 }
  ]
}
```

1. Create a dictionary file my_dict.txt (UTF-8 encoded) in the web server's root directory so that it is easy to reach over HTTP:

```shell
[root@iZwz9278r1bks3b80puk6fZ blog]# cat my_dict.txt
黄逸飞
谭林超
中国机长
```

2. Edit IKAnalyzer.cfg.xml (`vim IKAnalyzer.cfg.xml`) and set `remote_ext_dict` to the file's URL:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- Configure your own extension dictionaries here -->
    <entry key="ext_dict">custom/mydict.dic</entry>
    <!-- Configure your own extension stopword dictionaries here -->
    <entry key="ext_stopwords"></entry>
    <!-- Configure a remote extension dictionary here -->
    <entry key="remote_ext_dict">http://www.54288.top/my_dict.txt</entry>
    <!-- Configure a remote extension stopword dictionary here -->
    <!-- <entry key="remote_ext_stopwords">words_location</entry> -->
</properties>
```

After the remote dictionary is loaded, the same request returns:

```js
{
  "tokens": [
    { "token": "黄逸飞", "start_offset": 0, "end_offset": 3, "type": "CN_WORD", "position": 0 },
    { "token": "和", "start_offset": 3, "end_offset": 4, "type": "CN_CHAR", "position": 1 },
    { "token": "谭林超", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 2 },
    { "token": "超一", "start_offset": 6, "end_offset": 8, "type": "CN_WORD", "position": 3 },
    { "token": "一起", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 4 },
    { "token": "一", "start_offset": 7, "end_offset": 8, "type": "TYPE_CNUM", "position": 5 },
    { "token": "起", "start_offset": 8, "end_offset": 9, "type": "COUNT", "position": 6 },
    { "token": "去看", "start_offset": 9, "end_offset": 11, "type": "CN_WORD", "position": 7 },
    { "token": "看中", "start_offset": 10, "end_offset": 12, "type": "CN_WORD", "position": 8 },
    { "token": "中国机长", "start_offset": 11, "end_offset": 15, "type": "CN_WORD", "position": 9 },
    { "token": "中国", "start_offset": 11, "end_offset": 13, "type": "CN_WORD", "position": 10 },
    { "token": "机长", "start_offset": 13, "end_offset": 15, "type": "CN_WORD", "position": 11 }
  ]
}
```

Next, edit the remote file to check whether hot updating really works. Add the word 杨力生 and compare the results before and after, without restarting Elasticsearch:

```js
POST 54288.top:9200/_analyze
{
  "analyzer": "ik_max_word",
  "text": "黄逸飞和谭林超还有杨力生一起去看中国机长"
}
```

Before the change:

```js
{
  "tokens": [
    { "token": "黄逸飞", "start_offset": 0, "end_offset": 3, "type": "CN_WORD", "position": 0 },
    { "token": "和", "start_offset": 3, "end_offset": 4, "type": "CN_CHAR", "position": 1 },
    { "token": "谭林超", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 2 },
    { "token": "还有", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 3 },
    { "token": "杨", "start_offset": 9, "end_offset": 10, "type": "CN_CHAR", "position": 4 },
    { "token": "力", "start_offset": 10, "end_offset": 11, "type": "CN_CHAR", "position": 5 },
    { "token": "生", "start_offset": 11, "end_offset": 12, "type": "CN_CHAR", "position": 6 },
    { "token": "一起", "start_offset": 12, "end_offset": 14, "type": "CN_WORD", "position": 7 },
    { "token": "一", "start_offset": 12, "end_offset": 13, "type": "TYPE_CNUM", "position": 8 },
    { "token": "起", "start_offset": 13, "end_offset": 14, "type": "COUNT", "position": 9 },
    { "token": "去看", "start_offset": 14, "end_offset": 16, "type": "CN_WORD", "position": 10 },
    { "token": "看中", "start_offset": 15, "end_offset": 17, "type": "CN_WORD", "position": 11 },
    { "token": "中国机长", "start_offset": 16, "end_offset": 20, "type": "CN_WORD", "position": 12 },
    { "token": "中国", "start_offset": 16, "end_offset": 18, "type": "CN_WORD", "position": 13 },
    { "token": "机长", "start_offset": 18, "end_offset": 20, "type": "CN_WORD", "position": 14 }
  ]
}
```

The updated my_dict.txt:

```text
黄逸飞
谭林超
中国机长
杨力生
```

The Elasticsearch log (see the screenshot below) shows that 杨力生 was loaded. The same request now returns:

```js
{
  "tokens": [
    { "token": "黄逸飞", "start_offset": 0, "end_offset": 3, "type": "CN_WORD", "position": 0 },
    { "token": "和", "start_offset": 3, "end_offset": 4, "type": "CN_CHAR", "position": 1 },
    { "token": "谭林超", "start_offset": 4, "end_offset": 7, "type": "CN_WORD", "position": 2 },
    { "token": "还有", "start_offset": 7, "end_offset": 9, "type": "CN_WORD", "position": 3 },
    { "token": "杨力生", "start_offset": 9, "end_offset": 12, "type": "CN_WORD", "position": 4 },
    { "token": "一起", "start_offset": 12, "end_offset": 14, "type": "CN_WORD", "position": 5 },
    { "token": "一", "start_offset": 12, "end_offset": 13, "type": "TYPE_CNUM", "position": 6 },
    { "token": "起", "start_offset": 13, "end_offset": 14, "type": "COUNT", "position": 7 },
    { "token": "去看", "start_offset": 14, "end_offset": 16, "type": "CN_WORD", "position": 8 },
    { "token": "看中", "start_offset": 15, "end_offset": 17, "type": "CN_WORD", "position": 9 },
    { "token": "中国机长", "start_offset": 16, "end_offset": 20, "type": "CN_WORD", "position": 10 },
    { "token": "中国", "start_offset": 16, "end_offset": 18, "type": "CN_WORD", "position": 11 },
    { "token": "机长", "start_offset": 18, "end_offset": 20, "type": "CN_WORD", "position": 12 }
  ]
}
```
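At the start of this post, the standard analyzer split 测试中文分词器 into single characters. The following rough Python approximation shows how it treats mixed Chinese and English text:

- each CJK ideograph becomes its own token;
- runs of letters and digits become lowercased words;
- punctuation and spaces are dropped.

It is a simplified sketch, not Lucene's actual Unicode text segmentation (UAX #29) implementation, and the function names are hypothetical.

```python
import unicodedata


def is_han(ch: str) -> bool:
    """True for CJK unified ideographs, the characters that make up Chinese words."""
    return unicodedata.name(ch, "").startswith("CJK UNIFIED IDEOGRAPH")


def approx_standard_tokens(text: str) -> list[str]:
    """Rough approximation of the standard analyzer on mixed CJK/Latin text."""
    tokens: list[str] = []
    word: list[str] = []

    def flush() -> None:
        # Emit the pending run of letters/digits as one lowercased token.
        if word:
            tokens.append("".join(word).lower())
            word.clear()

    for ch in text:
        if is_han(ch):
            flush()
            tokens.append(ch)  # every ideograph becomes its own token
        elif ch.isalnum():
            word.append(ch)    # accumulate Latin letters and digits
        else:
            flush()            # punctuation and spaces are dropped
    flush()
    return tokens


print(approx_standard_tokens("测试中文分词器"))  # ['测', '试', '中', '文', '分', '词', '器']
print(approx_standard_tokens("Hello, 世界!"))    # ['hello', '世', '界']
```

The single-character output is why a dictionary-based analyzer such as IK is needed for Chinese.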
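In the ik_index searches, 测 finds nothing while 测试 finds the document. That follows from how the match query compares terms. The sketch below models this with a deliberately simplified max-word segmenter. It emits every dictionary word found in the text, longest first at each position, and falls back to single characters only where no word covers them. The dictionary `DICT` is a made-up example and the algorithm is a stand-in, not IK's actual implementation. It will not reproduce IK's output token for token.

```python
# Hypothetical mini dictionary; IK's real main.dic has far more entries.
DICT = {"测试", "中文", "分词", "分词器"}


def max_word_tokens(text: str, dictionary: set[str], max_len: int = 4) -> list[str]:
    """Simplified max-word segmentation: all dictionary words plus uncovered characters."""
    found: list[tuple[int, str]] = []
    covered = [False] * len(text)
    for start in range(len(text)):
        # Try candidate words from max_len characters down to 2 characters.
        for end in range(min(len(text), start + max_len), start + 1, -1):
            word = text[start:end]
            if word in dictionary:
                found.append((start, word))
                for i in range(start, end):
                    covered[i] = True
    # Characters not inside any dictionary word become single-character tokens.
    found += [(i, ch) for i, ch in enumerate(text) if not covered[i]]
    found.sort(key=lambda item: (item[0], -len(item[1])))
    return [word for _, word in found]


def match_query_hits(query: str, indexed_text: str, dictionary: set[str]) -> bool:
    """A match query (default OR operator) hits if any query term is an indexed term."""
    index_terms = set(max_word_tokens(indexed_text, dictionary))
    return any(term in index_terms for term in max_word_tokens(query, dictionary))


print(max_word_tokens("测试中文分词器", DICT))         # ['测试', '中文', '分词器', '分词']
print(match_query_hits("测", "测试中文分词器", DICT))    # False
print(match_query_hits("测试", "测试中文分词器", DICT))  # True
```

Because the query side uses the same analyzer as the index side, a single character only matches if the index also kept that character as a term.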
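`ext_dict` in IKAnalyzer.cfg.xml can list several files separated by semicolons, so it can help to check what the file actually contains. The helper below is a hypothetical sanity check written with Python's standard `xml.etree.ElementTree`, not IK's own loader. It reads the `<entry key=...>` elements and splits a dictionary list on `;`. Commented-out entries, like the `remote_ext_dict` line in the default file, are skipped because XML comments are not elements.

```python
import xml.etree.ElementTree as ET

# Sample based on the configuration in this post, with two local dictionaries.
SAMPLE_CFG = """
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <entry key="ext_dict">custom/mydict.dic;xxxx.dic</entry>
    <entry key="ext_stopwords"></entry>
    <!-- <entry key="remote_ext_dict">words_location</entry> -->
</properties>
"""


def read_ik_entries(xml_text: str) -> dict[str, str]:
    """Map each <entry key="..."> to its text; XML comments are not elements, so they are skipped."""
    root = ET.fromstring(xml_text.strip())
    return {e.get("key"): (e.text or "").strip() for e in root.iter("entry")}


def dictionary_paths(entries: dict[str, str], key: str) -> list[str]:
    """Split a semicolon-separated dictionary list, dropping empty items."""
    return [p.strip() for p in entries.get(key, "").split(";") if p.strip()]


entries = read_ik_entries(SAMPLE_CFG)
print(dictionary_paths(entries, "ext_dict"))       # ['custom/mydict.dic', 'xxxx.dic']
print(dictionary_paths(entries, "ext_stopwords"))  # []
print("remote_ext_dict" in entries)                # False
```

The paths are printed exactly as written in the file. IK resolves relative paths such as `custom/mydict.dic` against its own config directory.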
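Comparing two `_analyze` responses by eye, as in the 许金锭 / 蓝瘦香菇 comparison, gets tedious for longer texts. A small Python helper can list which tokens a dictionary change added or removed. The helpers are hypothetical. The sample responses are trimmed copies of the two results shown earlier, keeping only the `token` field.

```python
import json


def tokens_of(response_json: str) -> list[str]:
    """Extract the token strings from an _analyze response body."""
    return [t["token"] for t in json.loads(response_json)["tokens"]]


def diff_tokens(before: list[str], after: list[str]) -> tuple[list[str], list[str]]:
    """Return (added, removed) tokens, each kept in its original order."""
    before_set, after_set = set(before), set(after)
    added = [t for t in after if t not in before_set]
    removed = [t for t in before if t not in after_set]
    return added, removed


# Trimmed copies of the before/after responses (only the "token" field is kept).
BEFORE = json.dumps({"tokens": [{"token": t} for t in
    ["许", "金锭", "喜欢", "看", "攀登者", "攀登", "者", "蓝", "瘦", "香菇"]]})
AFTER = json.dumps({"tokens": [{"token": t} for t in
    ["许金锭", "金锭", "喜欢", "看", "攀登者", "攀登", "者", "蓝瘦香菇", "香菇"]]})

added, removed = diff_tokens(tokens_of(BEFORE), tokens_of(AFTER))
print("added:", added)      # added: ['许金锭', '蓝瘦香菇']
print("removed:", removed)  # removed: ['许', '蓝', '瘦']
```

Set membership ignores positions and offsets. Only use it to spot which words a dictionary change affected, not to compare full analysis output.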
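The remote dictionary in this post is just a static my_dict.txt served by the site's web server. According to the IK project's README, the URL must return `Last-Modified` and `ETag` headers, and IK re-fetches the word list (one word per line, separated by `\n`) whenever either value changes. Ordinary static-file servers such as nginx send both headers automatically.

If you would rather serve the file from a small script, the Python sketch below shows the idea using only the standard library. The file name, port, and function names are assumptions, and the server is not production-ready: it has no caching and no access control.

```python
import hashlib
import os
from email.utils import formatdate
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

WORDS_FILE = "my_dict.txt"  # assumption: UTF-8 file, one word per line


def dict_headers(content: bytes, mtime: float) -> dict[str, str]:
    """Response headers for the dictionary; the ETag changes whenever the content changes."""
    return {
        "Content-Type": "text/plain; charset=utf-8",
        "Content-Length": str(len(content)),
        "Last-Modified": formatdate(mtime, usegmt=True),
        "ETag": '"%s"' % hashlib.sha1(content).hexdigest(),
    }


class DictHandler(BaseHTTPRequestHandler):
    """Serves WORDS_FILE for every request path, answering both HEAD and GET."""

    def _send_headers(self) -> bytes:
        with open(WORDS_FILE, "rb") as f:
            content = f.read()
        self.send_response(200)
        for name, value in dict_headers(content, os.path.getmtime(WORDS_FILE)).items():
            self.send_header(name, value)
        self.end_headers()
        return content

    def do_HEAD(self) -> None:
        self._send_headers()

    def do_GET(self) -> None:
        self.wfile.write(self._send_headers())


def serve(port: int = 8000) -> None:
    """Block forever, serving the dictionary on the given port."""
    ThreadingHTTPServer(("", port), DictHandler).serve_forever()
```

To try it, call `serve(8000)` and set `remote_ext_dict` to `http://<your-host>:8000/my_dict.txt`. Editing my_dict.txt then changes both the modification time and the content hash used as the ETag.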
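Before pointing IK at a URL, you can check from any machine that the URL returns the headers IK relies on and a word list that parses cleanly. The sketch below uses `urllib.request` to send HEAD and GET requests. It decides whether a reload would be triggered by comparing `Last-Modified` and `ETag` with their previous values, following the rule described in the IK README. The function names are hypothetical, and the code mimics the documented behavior rather than IK's own code.

```python
import urllib.request
from typing import Optional

Fingerprint = tuple[Optional[str], Optional[str]]  # (Last-Modified, ETag)


def fetch_fingerprint(url: str, timeout: float = 10.0) -> Fingerprint:
    """Send a HEAD request and return the Last-Modified and ETag headers (None if missing)."""
    req = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.headers.get("Last-Modified"), resp.headers.get("ETag")


def needs_reload(previous: Optional[Fingerprint], current: Fingerprint) -> bool:
    """A change in either header means the word list should be fetched again."""
    return previous is None or previous[0] != current[0] or previous[1] != current[1]


def parse_words(body: bytes) -> list[str]:
    """One word per line, UTF-8 (a BOM is tolerated); blank lines are ignored."""
    return [line.strip() for line in body.decode("utf-8-sig").split("\n") if line.strip()]


def fetch_words(url: str, timeout: float = 10.0) -> list[str]:
    """GET the dictionary and return its words."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_words(resp.read())
```

For example, call `fetch_fingerprint("http://www.54288.top/my_dict.txt")`, edit the file, and call it again. `needs_reload(old, new)` should then return True. If either header comes back as None, the server is not sending what IK expects.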